ChatGPT Integration with Wildix PBX

This guide describes how to integrate ChatGPT with Wildix PBX.

Created: February 2023

Updated: January 2024

Permalink: https://wildix.atlassian.net/wiki/x/AQBxC

Introduction

ChatGPT is a state-of-the-art language model developed by OpenAI, designed to generate human-like responses to natural language queries. It is built using deep learning techniques and trained on a massive amount of text data, which allows it to understand the nuances of human language and generate relevant and coherent responses.

One practical application of ChatGPT is providing real-time technical support. With ChatGPT, companies can set up chat interfaces that allow users to ask questions about their products or services. ChatGPT can then respond to those questions with helpful answers or provide guidance to users.

Integrating ChatGPT with chatbots can enhance the functionality and responsiveness of the bot. Chatbots may not always have the correct answer to every question or situation. By integrating ChatGPT with a chatbot, the chatbot can access the vast knowledge base of ChatGPT and provide more comprehensive and accurate responses to users.

ChatGPT can be integrated as:

- Chatbot integrated in WebRTC Kite Chatbot - you can use it as a personal assistant in Collaboration / x-bees, or as a Kite chatbot for external users

- Speech To Text (STT) integration

Related sources:

- Wildix licensing

- For the primary settings of Kite Chatbot, consult Wildix WebRTC Kite Admin Guide

- Wildix Business Intelligence - Artificial Intelligence services

- OpenAI API documentation: https://platform.openai.com/docs/introduction

- To learn more about ChatGPT or the Kite Chatbot, you can watch the Wildix Tech Talk: Maximizing Efficiency with ChatGPT Webinar

Requirements

- Minimum WMS version: 5.02.20210127

- Business license or higher (assigned to the chatbot user)

- Premium license for STT integration

- Latest stable version of Node.js

Installation

- Download the archive: kite-xmpp-bot-master.zip

- Open the archive, navigate to /kite-xmpp-bot-master/app and open config.js with an editor of your choice

Replace the following values with your own:

- domain: 'XXXXXX.wildixin.com' - domain name of the PBX

- service: 'xmpps://XXXXXX.wildixin.com:443', - XMPP service URL, built from the domain name of the PBX

- username: 'XXXX', - Kitebot user extension number, do not change it

- password: 'XXXXXXXXXXXX' - Kitebot user password

- authorization: 'Bearer sk-XXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXX', - ChatGPT authorization token

- organization: 'org-XXXXXXXXXXXXXXXXXXXXXXXXX', - ChatGPT organization ID

- model: 'gpt-3.5-turbo', - this parameter specifies which GPT model to use for text generation. gpt-3.5-turbo points to the latest model version (e.g. gpt-3.5-turbo-0613). GPT-3.5 models can understand and generate natural language or code. It is the most capable and cost-effective model in the GPT-3.5 family, which can return up to 4096 tokens

- temperature: 0.1, - this parameter controls the "creativity" of the generated text by specifying how much randomness is introduced into the model's output; lower values give more predictable answers

- externalmaxtokens: 250, - response token limit for Kite users contacting the chatbot

- internalmaxtokens: 500 - response token limit for internal users contacting the chatbot

- systemcontent: 'Be a helpful assistant. Provide accurate but concise response.', - this parameter can be used as an instruction for the model. Define text style, tone, volume, formality, etc. here

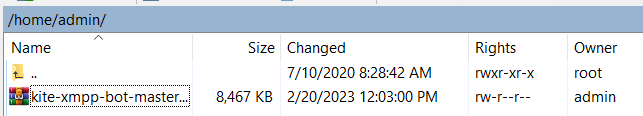
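The parameters above correspond to fields of an OpenAI Chat Completions request. The sketch below shows one way a bot could assemble such a request from these values. The shape of the `config` object and the `buildChatRequest` helper are illustrative assumptions; the actual config.js and bot code in the archive may be organized differently.

```javascript
// Hypothetical config object mirroring the parameters described above.
// The real config.js in the archive may be structured differently.
const config = {
  authorization: 'Bearer sk-XXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXX',
  organization: 'org-XXXXXXXXXXXXXXXXXXXXXXXXX',
  model: 'gpt-3.5-turbo',
  temperature: 0.1,
  externalmaxtokens: 250,
  internalmaxtokens: 500,
  systemcontent: 'Be a helpful assistant. Provide accurate but concise response.',
};

// Build the URL, headers and JSON body for a Chat Completions call.
// isExternal selects the token limit for Kite (external) users.
function buildChatRequest(cfg, userMessage, isExternal) {
  return {
    url: 'https://api.openai.com/v1/chat/completions',
    headers: {
      'Content-Type': 'application/json',
      Authorization: cfg.authorization,
      'OpenAI-Organization': cfg.organization,
    },
    body: {
      model: cfg.model,
      temperature: cfg.temperature,
      max_tokens: isExternal ? cfg.externalmaxtokens : cfg.internalmaxtokens,
      messages: [
        { role: 'system', content: cfg.systemcontent },
        { role: 'user', content: userMessage },
      ],
    },
  };
}

// Example: a question from an external Kite user gets the lower token limit.
const request = buildChatRequest(config, 'What is a SIP trunk?', true);
console.log(JSON.stringify(request.body, null, 2));
```

Keeping the system instruction as the first message and the user's text as the second matches the message layout used in the Dialplan example later in this guide.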

- Upload the archive to the /home/admin/ directory using WinSCP or any alternative SFTP client

- Connect to the web terminal and log in as the superuser with the su command (password: wildix)

Install Node.js and unzip:

apt-get install nodejs unzip

Unzip the archive:

unzip /home/admin/kite-xmpp-bot-master.zip

Copy the chatbot folder to /mnt/backups:

cp -r ./kite-xmpp-bot-master /mnt/backups/

Copy the chatbot.service.txt file to the systemd directory, enable the chatbot as a service so that it runs in the background, then start the service:

cp /mnt/backups/kite-xmpp-bot-master/chatbot.service.txt /etc/systemd/system/chatbot.service

systemctl enable chatbot.service

systemctl daemon-reload

systemctl start chatbot.service

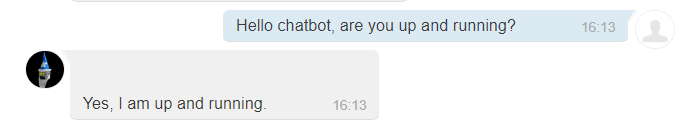

Verify that the chatbot is running by either running the ps command:

ps aux | grep node

Or simply send the bot a message.

The service sends standard and error output to wms.log, so you can check /var/log/wms.log when debugging.

STT Dialplan configuration example using ChatGPT and speech recognition

Use case: interact with ChatGPT by voice and have the response played back to the caller via TTS.

How-to:

- Download the Dialplan dialplan_2024.01.29_11.24.09_testupgrade_6.05.20240119.1_2211000083f3.bkp

- Go to WMS Dialplan -> Dialplan rules

- Click Import to add the downloaded Dialplan and click Apply

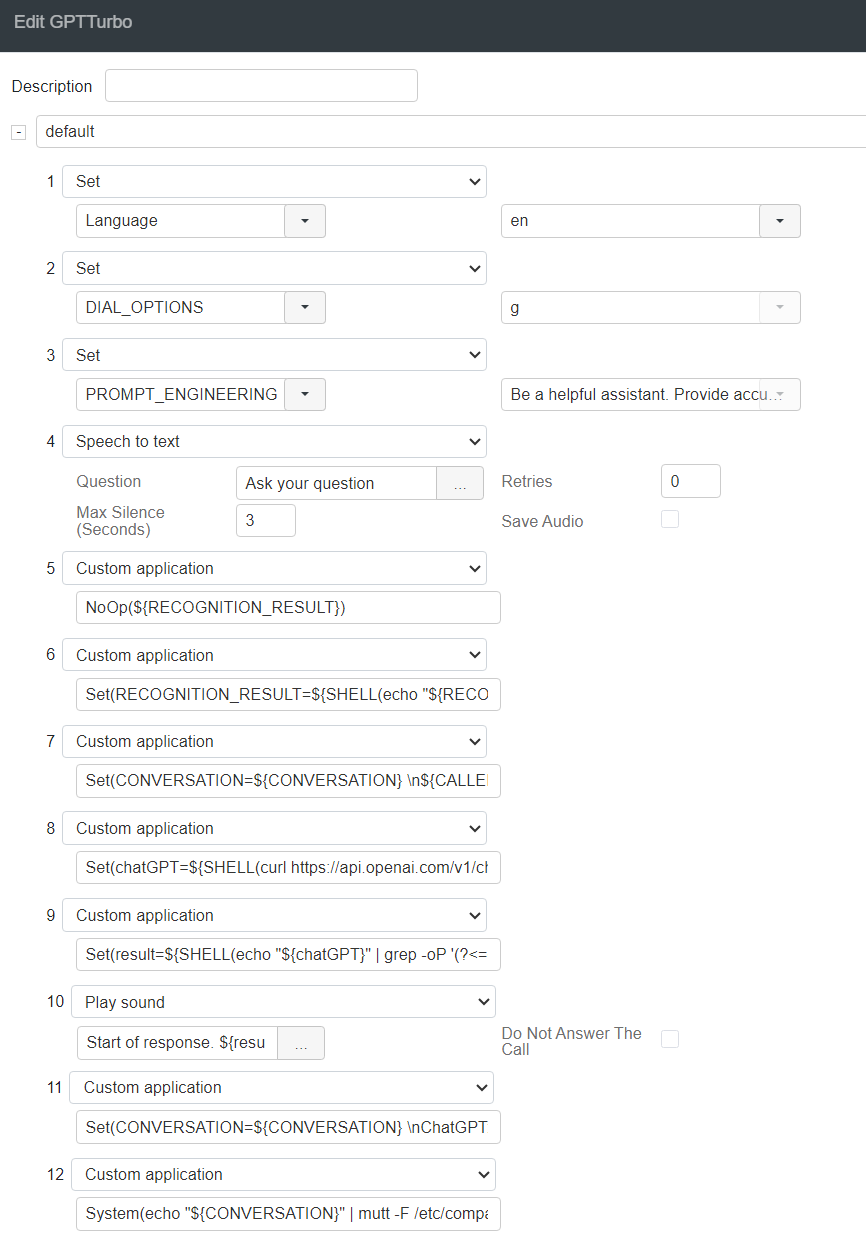

Dialplan applications explained:

- Set STT & TTS language

- Set DIAL_OPTIONS -> g - continues Dialplan execution after the Speech to text application, which allows looping the Dialplan to answer multiple questions

- Set PROMPT_ENGINEERING -> Be a helpful assistant. Provide accurate but concise response. - this parameter can be used as an instruction for the model. Define text style, tone, volume, formality, etc. here

- Speech to text - this application plays the voice prompt, captures the user's response and converts it to text. For an in-depth guide to the STT Dialplan application, see Dialplan applications Admin Guide

- NoOp(${RECOGNITION_RESULT}) - a debug application that shows the result of speech recognition; it can be safely removed

- Set(RECOGNITION_RESULT=${SHELL(echo "${RECOGNITION_RESULT}" | tr -d \'):0:-1}) - this removes any single quotes from the RECOGNITION_RESULT channel variable and stores the result back in RECOGNITION_RESULT. The :0:-1 substring removes the trailing newline character

- Set(CONVERSATION=${CONVERSATION} \n${CALLERID(name)} ${CALLERID(num)}: ${RECOGNITION_RESULT}) - this creates a variable that stores the conversation between the user and ChatGPT

- Set(chatGPT=${SHELL(curl https://api.openai.com/v1/chat/completions -H "Content-Type: application/json" -H "Authorization: Bearer sk-XXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXX" -d '{ "model": "gpt-3.5-turbo", "messages": [ {"role": "system", "content": "${PROMPT_ENGINEERING}"}, {"role": "user", "content": "${RECOGNITION_RESULT}"} ], "temperature": 0.8, "max_tokens": 500 }' | tr -d "'()"):0:-1}) - this sends an HTTP request to the OpenAI ChatGPT API to generate a response based on the RECOGNITION_RESULT and PROMPT_ENGINEERING variables. The resulting JSON string, with single quotes and parentheses stripped, is assigned to the chatGPT variable. gpt-3.5-turbo is the model name; you can learn more about ChatGPT models in the dedicated Tech Talk webinar or in the OpenAI documentation. Replace sk-XXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXX with your API token, keeping the Bearer prefix

- Set(result=${SHELL(echo "${chatGPT}" | grep -oP '(?<=content).*'):0:-1}) - this extracts the response text from the chatGPT variable by using grep to match the text that follows the "content" field

- Play sound -> ${result}. End of response. - this uses the text in the result variable to generate a text-to-speech message and plays the ChatGPT API response back to the caller, appending "End of response" to mark the end of the response; you can change this phrase to your preference

- Set(CONVERSATION=${CONVERSATION} \nChatGPT: ${result}) - this app adds the AI's response to the conversation variable created earlier

- System(echo "${CONVERSATION}" | mutt -F /etc/companies.d/0/Muttrc -s "ChatGPT ASR interaction" test@test.com) - this app emails the conversation; replace test@test.com with the address you want it sent to. This step is optional and can be safely removed without any impact on the STT part of the Dialplan
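The grep-based extraction in the list above depends on the exact text layout of the response. To illustrate which field actually holds the reply, the following sketch parses a Chat Completions response as JSON and reads `choices[0].message.content`. The `extractReply` helper and the abbreviated sample are assumptions made for illustration; they are not part of the imported Dialplan.

```javascript
// Hypothetical helper: return the assistant's reply from a Chat Completions
// response body, or null if the body is not valid JSON or has no reply
// (for example when the API returns an error object instead).
function extractReply(responseText) {
  let parsed;
  try {
    parsed = JSON.parse(responseText);
  } catch (err) {
    return null;
  }
  const choice = parsed && Array.isArray(parsed.choices) ? parsed.choices[0] : undefined;
  const content = choice && choice.message ? choice.message.content : undefined;
  return typeof content === 'string' ? content : null;
}

// Abbreviated sample modelled on the debug output shown in this guide.
const sample = JSON.stringify({
  model: 'gpt-3.5-turbo-0613',
  choices: [
    {
      index: 0,
      message: { role: 'assistant', content: 'The 403 error code indicates a "Forbidden" error.' },
      finish_reason: 'stop',
    },
  ],
});

console.log(extractReply(sample));
// An error body has no "choices" array, so the helper returns null.
console.log(extractReply('{"error": {"message": "Incorrect API key provided"}}'));
```

Returning null for anything that is not a well-formed reply gives the caller a single condition to check before passing text to TTS.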

Example callweaver output for debug purposes:

-- Executing [s@GPTTurbo:1] NoOp("SIP/26861-00000005", "Executing 'Set': LANGUAGE() - en")

-- Executing [s@GPTTurbo:1] NoOp("SIP/26861-00000005", "Executing 'Set': DIAL_OPTIONS - g")

-- Executing [s@GPTTurbo:1] NoOp("SIP/26861-00000005", "Executing 'Set': PROMPT_ENGINEERING - Be a helpful assistant. Provide accurate but concise response.")

-- Executing [s@GPTTurbo:1] NoOp("SIP/26861-00000005", "Executing 'Speech to text': question - "Ask your question", error message - "Didn't understand, please repeat", retries - 0, lenght - 10, silence - 3, save audio - "no"")

-- Executing [s@GPTTurbo:1] NoOp("SIP/26861-00000005", "Ask your question")

-- Executing [s@GPTTurbo:1] Playback("SIP/26861-00000005", "/tmp/tts_b8b61b2a4e90ec49477453f8fd49a109")

-- <SIP/26861-00000005> Playing '/tmp/tts_b8b61b2a4e90ec49477453f8fd49a109.slin' (language 'en')

-- Executing [s@GPTTurbo:1] Record("SIP/26861-00000005", "/tmp/stt_7654.wav,3,10,ky")

-- <SIP/26861-00000005> Playing 'beep.opus' (language 'en')

-- Executing [s@GPTTurbo:1] NoOp("SIP/26861-00000005", "Recognition result: what does a 403 error code mean in voice over IP")

-- Executing [s@GPTTurbo:1] NoOp("SIP/26861-00000005", "what does a 403 error code mean in voice over ip")

-- Executing [s@GPTTurbo:1] Set("SIP/26861-00000005", "RECOGNITION_RESULT=what does a 403 error code mean in voice over ip")

-- Executing [s@GPTTurbo:1] Set("SIP/26861-00000005", "CONVERSATION= \nKonstantin 26861: what does a 403 error code mean in voice over ip")

[Jan 29 11:37:00] ERROR[2107]: logger.c:2111 stderr_write_thread: STDERR: % Total % Received % Xferd Average Speed Time Time Time Current

Dload Upload Total Spent Left Speed

100 969 100 689 100 280 449 182 0:00:01 0:00:01 --:--:-- 632

-- Executing [s@GPTTurbo:1] Set("SIP/26861-00000005", "chatGPT={

-- "id": "chatcmpl-8mKEeyt70u4eXqKQ0w7pKmaf6kPQX",

-- "object": "chat.completion",

-- "created": 1706528220,

-- "model": "gpt-3.5-turbo-0613",

-- "choices": [

-- {

-- "index": 0,

-- "message": {

-- "role": "assistant",

-- "content": "The 403 error code in Voice over IP VoIP typically indicates a \"Forbidden\" error. This means that the server understood the request, but the user doesnt have permission to access the requested resource or perform the requested action."

-- },

-- "logprobs": null,

-- "finish_reason": "stop"

-- }

-- ],

-- "usage": {

-- "prompt_tokens": 34,

-- "completion_tokens": 48,

-- "total_tokens": 82

-- },

-- "system_fingerprint": null

-- }")

-- Executing [s@GPTTurbo:1] Set("SIP/26861-00000005", "result=: The 403 error code in Voice over IP VoIP typically indicates a "Forbidden" error. This means that the server understood the request, but the user doesnt have permission to access the requested resource or perform the requested action.")

-- Executing [s@GPTTurbo:1] NoOp("SIP/26861-00000005", "Executing 'Playback': sound - "Start of response. : The 403 error code in Voice over IP VoIP typically indicates a \"Forbidden\" error. This means that the server understood the request, but the user doesnt have permission to access the requested resource or perform the requested action.. End of response.", waitDigits - n, opts - ")

-- Executing [s@GPTTurbo:1] Playback("SIP/26861-00000005", "/tmp/tts_be0f2125fbd29e48987821d9dc475480,")

-- <SIP/26861-00000005> Playing '/tmp/tts_be0f2125fbd29e48987821d9dc475480.slin' (language 'en')

-- Executing [s@GPTTurbo:1] Set("SIP/26861-00000005", "CONVERSATION= \nKonstantin 26861: what does a 403 error code mean in voice over ip \nChatGPT: : The 403 error code in Voice over IP VoIP typically indicates a "Forbidden" error. This means that the server understood the request, but the user doesnt have permission to access the requested resource or perform the requested action.")

-- Executing [s@GPTTurbo:1] System("SIP/26861-00000005", "echo " \nKonstantin 26861: what does a 403 error code mean in voice over ip \nChatGPT: : The 403 error code in Voice over IP VoIP typically indicates a "Forbidden" error. This means that the server understood the request, but the user doesnt have permission to access the requested resource or perform the requested action." | mutt -F /etc/companies.d/0/Muttrc -s "ChatGPT ASR interaction" test@test.com")

-- Auto fallthrough, channel 'SIP/26861-00000005' status is 'UNKNOWN'
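The response in the output above also carries `finish_reason` and `usage` fields. In the OpenAI API, a `finish_reason` of `length` indicates that the reply was cut off by the `max_tokens` limit, which can be useful when tuning the token limits. Below is a minimal sketch of reading these fields; the `summarizeResponse` helper is a hypothetical name, and the object is trimmed from the logged response.

```javascript
// Hypothetical helper: report token usage and whether the reply hit max_tokens.
function summarizeResponse(response) {
  const choice = response.choices[0];
  return {
    truncated: choice.finish_reason === 'length',
    promptTokens: response.usage.prompt_tokens,
    completionTokens: response.usage.completion_tokens,
    totalTokens: response.usage.total_tokens,
  };
}

// Fields copied from the debug output above (message content omitted).
const logged = {
  model: 'gpt-3.5-turbo-0613',
  choices: [{ index: 0, finish_reason: 'stop' }],
  usage: { prompt_tokens: 34, completion_tokens: 48, total_tokens: 82 },
};

console.log(summarizeResponse(logged));
```

For the logged call the reply ended with `stop`, so it was not truncated by the 500-token limit set in the Dialplan request.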